An overview of Splunk costs

Did you know that using Splunk to index 5 GB of data per day would cost you more than $30,000? Splunk is one of the key players in the cloud monitoring and observability landscape, but its costs are extremely high, especially for small cloud businesses.

Today, observability has become a fundamental part of IT operations strategy. As a result, organizations deploy solutions like Splunk to gain visibility into the health of their infrastructure, applications, services, edge devices, and operations.

A core aspect of observability is building machine data pipelines from all systems that generate data to the Splunk system so that operations teams can gain meaningful insights.

However, connecting all your data sources to Splunk creates a huge volume of data streams that eventually drives up the total cost of ownership (TCO) of running Splunk.

Reducing Splunk costs using FLOW

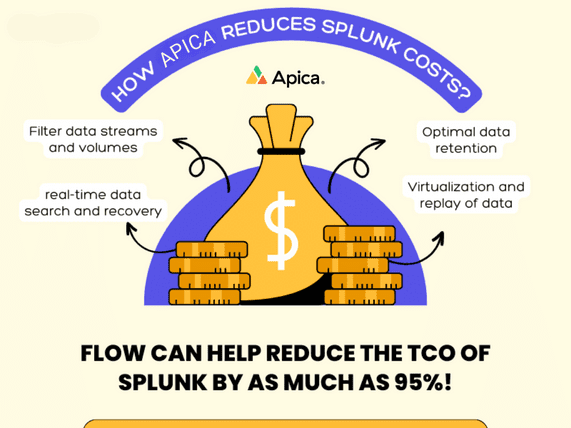

Fortunately, apica.io’s FLOW can keep these costs in check. FLOW addresses this using a unique observability pipeline model that enables:

- filtering of data streams and volumes

- routing and channeling of data to the right targets

- shaping and transforming data in transit

- optimal retention of data

- real-time data search and recovery, and

- virtualization and replay of data

With all of these capabilities combined, FLOW can help reduce the TCO of Splunk by as much as 95%.
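To make the filter, route, and shape steps listed above concrete, here is a minimal sketch of a pipeline stage in Python. It is not FLOW's API. The event fields (`level`, `raw_headers`, `stack_padding`), the target names (`archive`, `analytics`), and all function names are assumptions chosen purely for illustration.

```python
from typing import Any, Dict, Iterable, List

Event = Dict[str, Any]

# Hypothetical field names: fields assumed to add volume but little value.
NOISY_FIELDS = {"raw_headers", "stack_padding"}


def is_worth_indexing(event: Event) -> bool:
    """Filter: keep everything except DEBUG-level events."""
    return event.get("level") != "DEBUG"


def shape(event: Event) -> Event:
    """Shape: return a copy of the event without the noisy fields."""
    return {k: v for k, v in event.items() if k not in NOISY_FIELDS}


def run_pipeline(events: Iterable[Event]) -> Dict[str, List[Event]]:
    """Route: every original event goes to the archive target;
    only filtered, shaped events go to the analytics target."""
    out: Dict[str, List[Event]] = {"archive": [], "analytics": []}
    for event in events:
        out["archive"].append(event)
        if is_worth_indexing(event):
            out["analytics"].append(shape(event))
    return out
```

In this sketch the analytics target stands in for a licensed, volume-priced system, so only the trimmed stream reaches it, while the archive target keeps the untouched originals.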

Getting out of “ingest hell”

Organizations that have deployed Splunk often fall into “ingest hell”, a situation where a customer has to pay high licensing and infrastructure costs as a direct result of their ingest volumes. The more they ingest, the more they pay.

FLOW frees organizations from this “ingest hell” without the need to replace Splunk. By acting as a filter and shaper that sits in the observability pipeline of an organization, FLOW dramatically reduces the cost of licensing and running Splunk by intelligently eliminating all the redundant data streams and cutting the noise within each event log.

Through this process, FLOW reduces ingest volumes by up to 95%, directly reducing the costs associated with Splunk licenses and infrastructure.

Routing data to where it’s needed

The market’s current observability pipeline control solutions let you route your data to a data lake for low-cost, long-term storage, but data lakes are known to be very slow. Apica’s engineering innovation instead creates what we like to call data dams, which can be queried, analyzed, and mined in real time.

Besides reducing ingest traffic and costs, Apica’s FLOW enables organizations to route 100% of the original observability data to a real-time data lake built on any S3-compatible object storage, which helps maintain compliance.

Would you like to know the cost of this real-time data lake? It won’t cost you more than the object storage itself: a penny or two per GB, depending on your choice of object storage.
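As a rough worked example of that arithmetic, the sketch below estimates the steady-state storage bill for a retained data set. It assumes the per-GB price is charged per month, which the text above does not state, and it ignores compression, request fees, and egress. The function name and parameters are hypothetical.

```python
def monthly_storage_cost(
    daily_ingest_gb: float,
    retention_days: int,
    price_per_gb_month: float,
) -> float:
    """Monthly cost of keeping `retention_days` worth of data in object storage.

    Assumption: price is per GB per month, with no compression or extra fees.
    """
    if daily_ingest_gb < 0 or retention_days < 0 or price_per_gb_month < 0:
        raise ValueError("inputs must be non-negative")
    stored_gb = daily_ingest_gb * retention_days
    return stored_gb * price_per_gb_month


# 5 GB/day kept for a year at an assumed $0.02 per GB per month.
cost = monthly_storage_cost(daily_ingest_gb=5, retention_days=365, price_per_gb_month=0.02)
print(f"${cost:.2f} per month")
```

Under these assumptions, a full year of 5 GB/day (1,825 GB) comes to about $36.50 per month.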

Observability data is only helpful if available to the teams and target systems that need it in real-time and on demand. FLOW, along with the InstaStore data dam, allows teams to route observability and machine data from any source to any target. With FLOW, teams can use any combination of data volume and streams between source and target systems like Splunk, S3, Snowflake, Databricks, QRadar, home-grown data lakes, DBs, Elastic, Azure, GCP, etc.

Furthermore, Apica completely frees end-users from the burden of modifying configurations at either the source or the target. FLOW lets organizations enhance the value of their existing observability, security, and compliance investments. With Apica’s FLOW, it is the end-user, not Splunk, who gains total control over their observability system, pipelines, and data.

Datastream equality

Besides cost reduction, effective routing, and efficient storage, organizations can unlock faster remediation times, real-time compliance, and enhanced security.

With Apica, you’ll never need to block data streams or drop data at ingestion. Regardless of how fast your data volumes grow, Apica’s FLOW can ingest, filter, enhance, and store logs at an infinite scale without influencing Splunk infrastructure costs.

It performs at an endless scale with any observability data without the need to “understand” a customer’s environment.

FLOW’s AI and ML capabilities make it highly intuitive and easy to use, delivering value in minutes instead of days or weeks. With Apica, Splunk admins no longer need to invest time or resources in managing SmartStore and running through its various configuration permutations.

Instead, FLOW lets you treat all of your data streams with equal priority and log, store, and process all the data you need without worrying about costs or infrastructure.

Infinite storage for Splunk

FLOW’s storage layer, InstaStore, uses any S3-compatible object store as its primary storage layer. Using object stores as primary storage means that FLOW can handle any growth in data volumes (even theoretically infinite) using simple API calls. It retains any volume of data, whether TBs or PBs, in precisely the same way. This capability allows you to use FLOW not only to manage, refine, and unify your data streams but also as a storage sidecar for Splunk. With its real-time data forwarding and replay capabilities, you can forward any volume of data to Splunk in real time while unlocking infinite retention for that data within InstaStore.
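The forward-and-replay pattern described above can be sketched in a few lines of Python. This is an illustration of the idea only, not InstaStore's implementation: an in-memory list stands in for the object store, `forward` is any callable that delivers an event downstream, and the `ts` timestamp field and all names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List

Event = Dict[str, Any]


@dataclass
class ArchiveWithReplay:
    """Retains every event and forwards it downstream as it arrives."""

    forward: Callable[[Event], None]
    _events: List[Event] = field(default_factory=list)

    def ingest(self, event: Event) -> None:
        self._events.append(event)  # retain a copy (stand-in for object storage)
        self.forward(event)         # forward downstream immediately

    def replay(self, start_ts: float, end_ts: float) -> int:
        """Re-forward retained events with start_ts <= ts < end_ts.

        Returns the number of events replayed.
        """
        matches = [e for e in self._events if start_ts <= e["ts"] < end_ts]
        for event in matches:
            self.forward(event)
        return len(matches)
```

A real deployment would write to durable object storage and index by time rather than scanning a list, but the contract is the same: the downstream system can age data out, and any time window can be re-sent later from the retained copy.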

Apica’s FLOW is the first real-time platform to bring together object-storage-like scalability, one-hop lookup, faster retrieval, ease of use, identity management, lifecycle policies, data archival, and other capabilities.

For companies processing TBs of data per day on Splunk, Apica can help save millions of dollars per year purely on licensing, storage, and infrastructure terms.

How does Apica help in reducing Splunk costs?

The proliferation of data complexity and sprawl in recent years has given rise to new challenges in enterprise observability. Limited pipeline control, siloed data, and outdated monitoring methods are just a few of the factors that hinder the efforts of tech and DevOps teams. This, in turn, can negatively impact business performance.

To address these challenges, Apica’s FLOW is designed to control and streamline data. It aggregates logs from multiple sources, improves data quality, and allows for data projection to one or more destinations. Additionally, FLOW helps to reduce customer response time by enhancing the data analysis process.

To give knowledge workers greater value from data, IT and DevOps engineers require better technical capabilities. Enterprises must therefore exercise greater control over data volumes, diverse sources, and data sophistication to improve competitiveness.

Apica helps to reduce observability costs by providing an all-in-one APM, log management, and analytics solution that offers a single source of truth for any organization’s entire IT infrastructure. This results in reduced operational overhead, as teams no longer need to manage multiple separate solutions or manually integrate them to get the necessary visibility.

Apica also provides granular cost breakdowns into resource utilization and performance metrics, enabling organizations to precisely identify areas where they can optimize their observability costs.

At a glance:

- Splunk costs for cloud observability are extremely high, especially for small cloud businesses

- Apica’s FLOW uses a unique observability pipeline model that enables filtering of data streams and volumes, routing and channeling of data to the right targets, shaping and transforming data in transit, optimal retention of data, real-time search and recovery, and virtualization and replay of data.

- Apica’s FLOW helps reduce the TCO associated with Splunk by up to 95% while freeing organizations from “ingest hell” without needing to replace Splunk.

- FLOW allows teams to route observability and machine data from any source system (e.g., Splunk) into any target system (e.g., S3 or Snowflake).

- It eliminates the need for admins to invest time managing SmartStore configurations, and its AI/ML capabilities make it easy to use and quick to deliver value.