Amazon S3 is often the platform of choice for companies looking to store corporate data, address their ever-increasing data storage needs, and even host static websites. However, because S3 is a relatively simple storage service, you may need to set up additional machine data pipelines and monitoring solutions outside of the AWS ecosystem to monitor data access and application performance. These pipelines and systems can either run alongside your existing AWS-based monitoring setup or replace it entirely.

If your IT environment uses AWS S3 for storage, it is crucial to watch AWS S3 server access logs. They provide detailed records of the requests made to a bucket. These logs are useful for data security, compliance, auditing data access, and debugging multi-point failures if they occur. AWS S3 server access logs are written to an S3 bucket. Administrators can assign an optional prefix that makes log objects easier to locate and manage. Administrators can also delegate role-based access to these logs.
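To make the record layout concrete, here is a minimal Python sketch that splits one access log line into named fields. The field order follows the leading fields of the server access log format as documented by AWS; the sample line and the name `parse_access_log_line` are illustrative assumptions, and the Apica exporter may parse logs differently.

```python
import re

# Leading fields of an S3 server access log record, in order.
FIELDS = [
    "bucket_owner", "bucket", "time", "remote_ip", "requester",
    "request_id", "operation", "key", "request_uri", "http_status",
    "error_code", "bytes_sent", "object_size", "total_time",
    "turn_around_time", "referer", "user_agent", "version_id",
]

# A token is a [bracketed] timestamp, a "quoted" string, or a run of non-spaces.
TOKEN = re.compile(r'\[([^\]]*)\]|"([^"]*)"|(\S+)')


def parse_access_log_line(line):
    """Split one log line into a dict keyed by the field names above."""
    tokens = []
    for match in TOKEN.finditer(line):
        # Exactly one of the three groups matched; keep its text.
        tokens.append(next(g for g in match.groups() if g is not None))
    record = dict(zip(FIELDS, tokens))
    # Newer log versions append more fields; keep them without naming them.
    record["extra"] = tokens[len(FIELDS):]
    return record


# Hypothetical sample line, shortened to the leading fields.
SAMPLE = (
    'owner123 example-bucket [06/Feb/2019:00:00:38 +0000] 192.0.2.3 '
    'requester123 3E57427F3EXAMPLE REST.GET.OBJECT photos/cat.jpg '
    '"GET /photos/cat.jpg HTTP/1.1" 200 - 2048 2048 15 12 "-" "curl/8.0" -'
)
record = parse_access_log_line(SAMPLE)
```

This sketch does not handle quoted values that themselves contain escaped double quotes, which can appear in user-agent strings.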

In this article, we’ll take you through how to forward AWS S3 server access logs to Apica for further analysis.

Forwarding AWS S3 server access logs to Apica

By forwarding your AWS S3 logs to Apica, you can:

- unify your storage and data access logs with logs from other systems in your IT environment

- ingest logs without any buffering at any scale

- augment your S3 logs for more relevance

- store your logs for longer durations at ZeroStorageTax with InstaStore

- forward or replay AWS S3 logs to any other target system of your choice on demand

Since we love keeping our integrations simple, we’ve built a CloudFormation template that you can configure and use to provision a stack with the Apica S3 exporter Lambda function. The S3 bucket that stores server access logs sends an event when a log file is added. When an event is triggered, the Lambda function reads and processes the access log and sends it to your Apica instance.
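The flow described above can be sketched in Python roughly as follows. This is not the actual Apica exporter code: `log_objects_from_event`, `handle_event`, `read_object`, and `send` are hypothetical names, and the reading and forwarding steps are passed in as callables so the sketch makes no assumption about Apica's ingest API.

```python
from urllib.parse import unquote_plus


def log_objects_from_event(event):
    """Yield (bucket, key) for each object named in an S3 event notification."""
    for record in event.get("Records", []):
        s3 = record.get("s3")
        if not s3:
            continue  # not an S3 record
        # Object keys arrive URL-encoded in S3 event notifications.
        yield s3["bucket"]["name"], unquote_plus(s3["object"]["key"])


def handle_event(event, read_object, send):
    """Read each new log object and forward its non-empty lines.

    read_object(bucket, key) -> str and send(lines) are supplied by the
    caller; both are placeholders for illustration.
    """
    forwarded = 0
    for bucket, key in log_objects_from_event(event):
        body = read_object(bucket, key)
        lines = [line for line in body.splitlines() if line.strip()]
        if lines:
            send(lines)
            forwarded += len(lines)
    return forwarded
```

In a real Lambda function, `read_object` would typically wrap an S3 `get_object` call, and `send` would post the lines to your Apica instance.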

The following image illustrates how the Apica S3 exporter Lambda function works.

Creating the Lambda function

To create the Apica S3 exporter Lambda function, do the following:

- Download the CloudFormation template from the following URL: https://Apicacf.s3.amazonaws.com/s3-exporter/cf.yaml

- Within the downloaded CloudFormation template, configure the parameters listed in the following table with values related to your Apica instance.

| Parameter | Description |
| --- | --- |
| APPNAME | Application name – a readable name for Apica to partition logs. |
| CLUSTERID | Cluster ID – a readable name for Apica to partition logs. |
| NAMESPACE | Namespace – a readable name for Apica to partition logs. |
| ApicaHOST | IP address or hostname of the Apica server. |
| INGESTTOKEN | JWT token to securely ingest logs. |

The CloudFormation template provisions a stack and deploys the Lambda function along with the permissions it needs.
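If you prefer to script the deployment rather than edit the template by hand, the parameter values can be assembled in the list format that the CloudFormation API expects. The sketch below assumes the template exposes the five names from the table as CloudFormation parameters; `build_stack_parameters` and the sample values are hypothetical.

```python
# Parameter names taken from the table above.
REQUIRED = ("APPNAME", "CLUSTERID", "NAMESPACE", "ApicaHOST", "INGESTTOKEN")


def build_stack_parameters(values):
    """Return the Parameters list for a CloudFormation create-stack call."""
    missing = [name for name in REQUIRED if not values.get(name)]
    if missing:
        raise ValueError("missing parameter values: " + ", ".join(missing))
    return [
        {"ParameterKey": name, "ParameterValue": str(values[name])}
        for name in REQUIRED
    ]


# Placeholder values for illustration only.
parameters = build_stack_parameters({
    "APPNAME": "s3-access-logs",
    "CLUSTERID": "prod-cluster",
    "NAMESPACE": "storage",
    "ApicaHOST": "apica.example.com",
    "INGESTTOKEN": "<your-ingest-token>",
})
```

The resulting list can be passed as the `Parameters` argument of boto3's CloudFormation `create_stack` call, together with the template body. Because the stack creates permissions for the Lambda function, the call will likely also need an IAM capability acknowledgement such as `CAPABILITY_IAM`; check the template to confirm.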

Configuring an S3 trigger

After provisioning the CloudFormation stack, do the following to add and configure an S3 trigger.

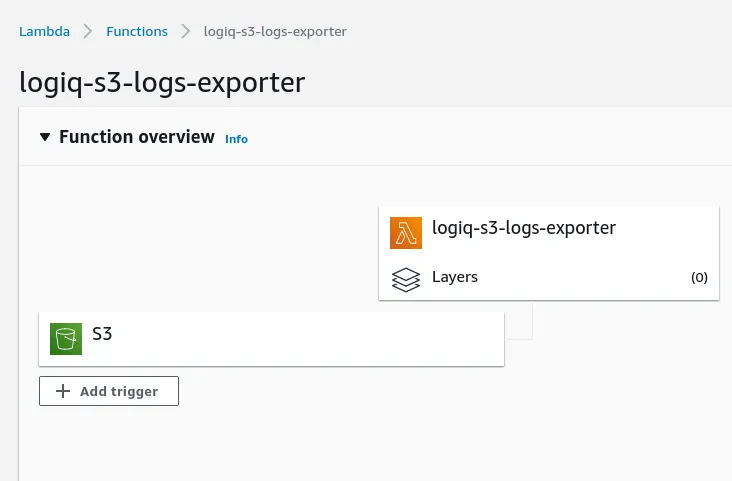

- Navigate to the AWS Lambda function (

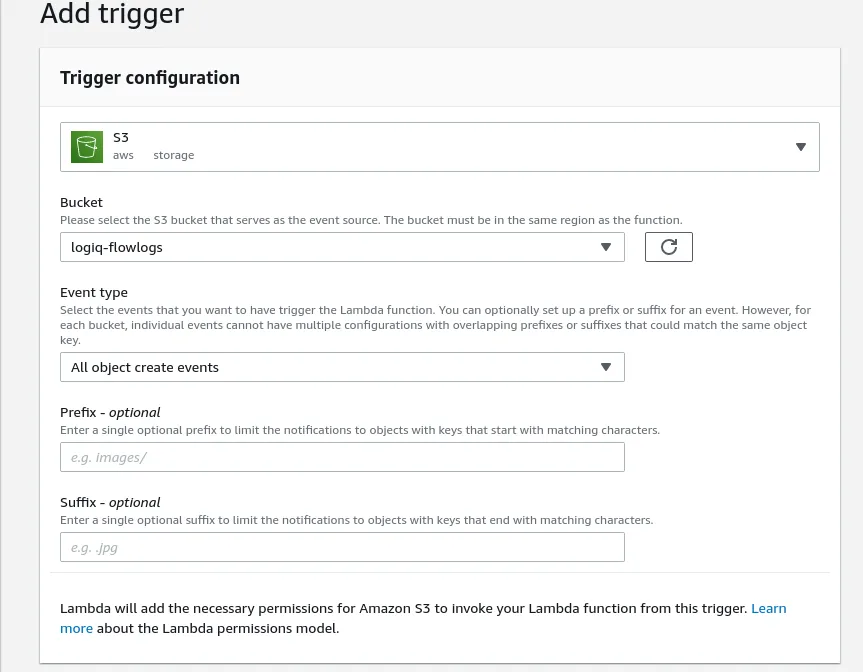

Apica-s3-logs-exporter) to add an S3 trigger. - On the Add trigger page, select S3.

- Next, select the Bucket you’d like to forward logs from.

- Optionally, add a Prefix to make it simpler for you to locate the log objects.

Once this configuration is complete, any new log files added to the access log S3 bucket will be streamed to the Apica cluster.
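The same trigger can also be expressed as an S3 bucket notification configuration. The following Python sketch builds that structure for a given function ARN and optional prefix; `build_notification_config` and the sample ARN are hypothetical, and the choice of the `s3:ObjectCreated:*` event type is an assumption about what the console trigger sets up.

```python
def build_notification_config(lambda_arn, prefix=None):
    """Build an S3 notification configuration that invokes a Lambda function."""
    config = {
        "LambdaFunctionArn": lambda_arn,
        # Assumed event type: fire whenever a new log object is created.
        "Events": ["s3:ObjectCreated:*"],
    }
    if prefix:
        # Only objects whose key starts with the prefix trigger the function.
        config["Filter"] = {
            "Key": {"FilterRules": [{"Name": "prefix", "Value": prefix}]}
        }
    return {"LambdaFunctionConfigurations": [config]}


# Placeholder ARN and prefix for illustration only.
notification = build_notification_config(
    "arn:aws:lambda:us-east-1:123456789012:function:Apica-s3-logs-exporter",
    prefix="access-logs/",
)
```

This dictionary matches the `NotificationConfiguration` argument of boto3's S3 `put_bucket_notification_configuration` call. Note that the call replaces the bucket's existing notification configuration, and that S3 must separately be granted permission to invoke the function; the Lambda console adds that permission for you when you create the trigger there.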